The leading artificial intelligence company, OpenAI, recently fired and then promptly rehired its CEO, Sam Altman, and switched up its board of directors as a result. Along the way, there have been all sorts of takes about the board’s intentions, the employees’ reasons for threatening to quit, and much more. In all of the coverage, I don’t think we have sufficiently discussed what OpenAI’s unusual governance structure means for artificial intelligence development and for our society.

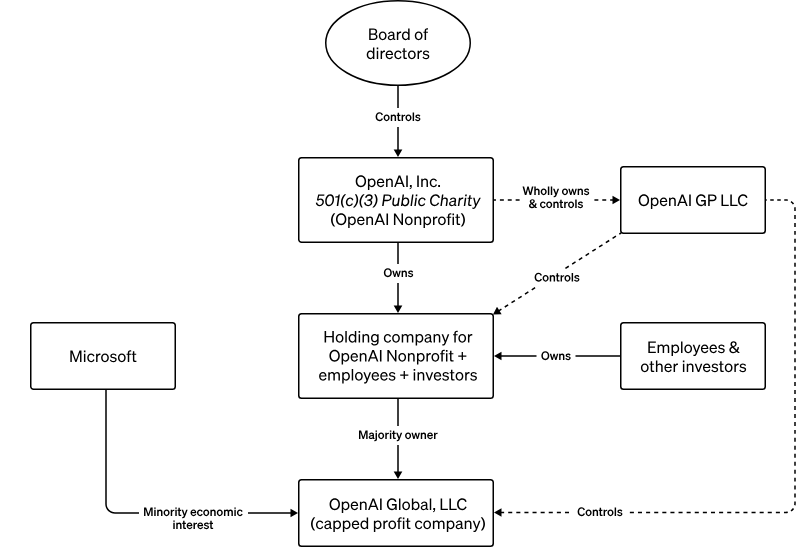

I've been following the saga closely because I work on making investors more comfortable with alternatives to traditional enterprise governance models. OpenAI is an example of such an alternative: the company is controlled by a 501(c)(3) nonprofit, a majority of the directors on the nonprofit's board are independent and don't own OpenAI stock, and "the Nonprofit’s principal beneficiary is humanity, not OpenAI investors."

OpenAI’s structure

At Transform Finance, we see companies that are operated by and for stakeholders (such as workers, consumers, communities, or purposes like the planet) as a crucial piece of the puzzle in building an economy that works for everyone. Over the past few weeks, I've been wondering whether what happened at OpenAI will make investors more interested in these mission-oriented structures or more reluctant to back them. What can we learn from this stress test about how to promote alternative governance structures?

Example Alternative Ownership Enterprise Models

My main takeaway is that structure is necessary but not sufficient to protect a company's mission. The individuals involved, along with their beliefs, expertise, and influence, matter hugely as well. Even though the OpenAI board had the formal power to fire Sam Altman, Altman himself, OpenAI's employees, and its investors (most notably Microsoft) also held a lot of power, which they promptly flexed to push back against the board's decision. While it may be tempting to think an independent governance structure can help ensure a particular outcome, in reality you may not be able to use the power that the structure technically gives you.

In addition, who holds this governing power matters. Perhaps board members with savvier public relations skills would have managed the OpenAI situation better? More seriously, the board members at OpenAI are not representative of the “humanity” they are meant to protect. Given that, can they succeed in their mission?

All of this helps temper my expectations about the "Long-Term Benefit Trust" structure used by Anthropic, one of OpenAI's competitors. Anthropic recently released more information about the governance structure the company has set up to help safeguard against the potential risks of artificial intelligence, in addition to incorporating as a Public Benefit Corporation. The Trust, governed by external trustees, controls “T-shares,” which carry unique powers, in particular the power to nominate a majority of the company's board over time. With some exceptions, this essentially grants control of the company to external guardians instead of investors. Given the OpenAI experience, it will be especially interesting to see who is appointed as a trustee.

It's also important to acknowledge the inherent tension at play here. Artificial intelligence development is currently an arms race between many companies in an industry with a "move fast and break things" ethos. Any governance model seeking to prevent risks associated with artificial intelligence faces a challenging context. How do investors feel about putting money into a company that might lose the race because its governance model makes it too slow or limits investor returns? How would they feel if they funded an artificial general intelligence (AGI) that brings about the dystopian visions of the future we have imagined? More fundamentally, is this even a decision that individual investors should be making? Given the risks inherent in artificial intelligence, government regulation seems likely to be critical as well, both to prevent harms and to increase the likelihood that the technology benefits all of humanity.

Overall, I’m glad that this governance structure, which was designed to benefit everyone and not just investors, held up under intense pressure. As of now, Sam Altman is back as CEO with slightly reduced powers (he is no longer on the board), and investors, founders, and advocates are much more aware that many options exist when setting up a company.

For anyone looking for alternative models, Transform Finance recently released a report that outlines and compares 10+ models for setting up companies that more appropriately balance the rights of shareholders with the rights of the stakeholders a company affects, and that give those affected by its activities a say in its decisions. Several of those models, from foundation ownership to golden shares to perpetual purpose trusts, could be applicable in situations like OpenAI's. We're excited to see these models continue to be tested in the real world, and to build toward a more just economy and toward artificial intelligence solutions that don’t take all of our jobs (or worse).

Written by Julie Menter, Program Director of our Transformative Financing Structures Program. Thanks to Rose Bloomin, Jackie Mahendra and the Transform Finance team for their help with this article.